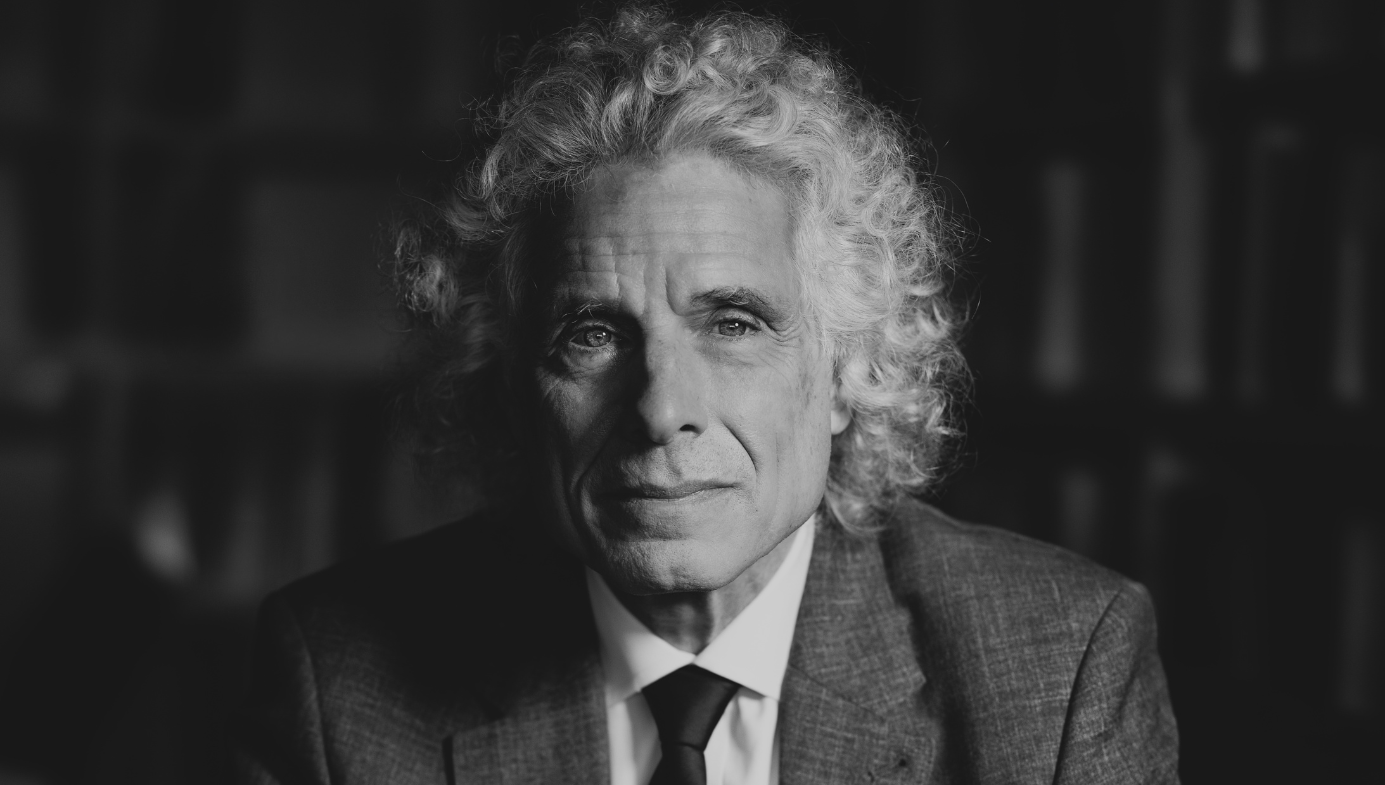

I found this wonderful interview with Steven Pinker.

An interview with Steven Pinker.

quillette.com

It touches on many topics, including current politics, but it also has a lot to say about AI. It is a bit of a hard read, but worth it.

Q: I know you’ve criticized members of the AI-safety movement who are concerned about existential risk. What do you think they get wrong about the nature of intelligence?

SP: One is the unstated assumption that intelligence is bundled with a desire to dominate, so that if a system is super-smart, it will use its intelligence to annihilate us. Those two traits do come bundled in *Homo sapiens*, because we’re products of an inherently competitive process, natural selection, and it’s easy to project human flaws onto other intelligent systems. But logically speaking, motivation is independent of calculation. An intelligent system will pursue whatever goals are programmed into it, and keeping itself in power or even alive indefinitely need not be among them.

Then there are the collateral-damage scenarios, like a literal-minded AI that is given the goal of eliminating cancer or war and so exterminates the human race, or one that is given the goal of maximizing the manufacture of paper-clips and mines our bodies for the raw materials. And in these doomer scenarios, the AIs have become so smart so fast that they have figured out how to prevent us from pulling the plug.

The first problem with this way of thinking is an unwillingness to multiply out the improbabilities of a scenario in which artificial intelligence would not only be omniscient, with the power to solve any problem, but omnipotent, controlling the planet down to the last molecule. Another is thinking of intelligence as a magical power rather than a gadget. Intelligence is a set of algorithms which deploy knowledge to attain goals. Any such knowledge is limited by data on how the world works. No system can deduce from first principles how to cure cancer, let alone bring about world peace or achieve world domination or outsmart eight billion humans.

Intelligence doesn’t consist of working out calculations like Laplace’s Demon from data on every particle in the universe. It depends on empirical testing, on gathering data and running experiments to test causality, with a time course determined by the world. The fear that a system would recursively improve its own intelligence and achieve omnipotence and omniscience in infinitesimal time, so quickly that we would have no way to control it, is a fantasy. Nothing in the theory of knowledge or computation or real-life experience with AI suggests it.

Q: Do AI doomers make the mistake of thinking intelligence is a discrete quantity that you can just multiply over and over and over again?

SP: There’s a lot of that. It’s a misunderstanding of the psychometric concept of “general intelligence,” which is just an intercorrelation in performance among all the subtests in an IQ test. General intelligence in this sense comes from the finding that if you’re smart with words, you’re probably smarter than average with numbers and shapes too. With a bit of linear algebra, you can pull out a number that captures the shared performance across all these subtests. But this measure of individual differences among people can’t be equated with some problem-solving elixir whose power can be extrapolated. That leads to loose talk about an AI system that is 100 times smarter than Einstein—we have no idea what that even means.
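To make the "bit of linear algebra" concrete, here is a minimal Python sketch. It is not Pinker's own method: it takes an invented correlation matrix for three subtests and extracts its first principal component by power iteration, a common stand-in for the general factor from factor analysis. The matrix values and the names `CORR` and `first_component` are assumptions made up for illustration.

```python
import math

# Hypothetical correlations among three subtests (verbal, numerical, spatial).
# These numbers are invented for illustration, not taken from any real test.
CORR = [
    [1.0, 0.6, 0.5],
    [0.6, 1.0, 0.4],
    [0.5, 0.4, 1.0],
]

def first_component(matrix, iterations=200):
    """Return (eigenvalue, unit eigenvector) of the dominant component."""
    n = len(matrix)
    vec = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        # Power iteration: multiply by the matrix, then renormalise.
        product = [sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in product))
        vec = [x / norm for x in product]
    # Rayleigh quotient gives the eigenvalue for the converged vector.
    eigenvalue = sum(
        vec[i] * sum(matrix[i][j] * vec[j] for j in range(n)) for i in range(n)
    )
    return eigenvalue, vec

eigenvalue, weights = first_component(CORR)
share = eigenvalue / len(CORR)  # fraction of total variance on the shared factor
print(weights, share)
```

With these made-up correlations every subtest gets a positive weight and the single component accounts for roughly two thirds of the variance. That is all the number is: a summary of how test scores move together across people, which is why it says nothing about what "100 times smarter" would mean.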

There’s another shortcoming in these scenarios, and that is to take the current fad in AI research as the definition of intelligence itself. This is so-called deep learning, which means giving a goal to an artificial neural network and training it to reduce the error signal between the goal state and its current state, via propagation of the error signals backwards through a network of connected layers. This leads to an utterly opaque system—the means it has settled on to attain its goal are smeared across billions of connection weights, and no human can make sense of them. Hence the worry that the system will solve problems in unforeseen ways that harm human interests, like the paperclip maximizer or genocidal cancer cure.
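The training loop described above can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not how any production system is built: a network with one input, two hidden `tanh` units and one output, fitted to `y = x^2` on five points by propagating the error backwards through both layers. The data, starting weights, learning rate and the function name `train` are all chosen here purely for illustration.

```python
import math

# Toy data: learn y = x^2 on five points (chosen only for illustration).
XS = [-1.0, -0.5, 0.0, 0.5, 1.0]
YS = [x * x for x in XS]

def train(steps=2000, lr=0.05):
    # Fixed starting weights so the run is reproducible.
    w1, b1 = [0.5, -0.5], [0.1, -0.1]   # input -> hidden layer
    w2, b2 = [0.3, -0.2], 0.0           # hidden -> output layer
    losses = []
    for _ in range(steps):
        gw1, gb1, gw2, gb2 = [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], 0.0
        loss = 0.0
        for x, y in zip(XS, YS):
            # Forward pass: compute the network's current output.
            h = [math.tanh(w1[k] * x + b1[k]) for k in range(2)]
            out = w2[0] * h[0] + w2[1] * h[1] + b2
            err = out - y                      # error signal at the output
            loss += err * err / len(XS)
            # Backward pass: push the error signal back through each layer.
            d_out = 2.0 * err / len(XS)
            gb2 += d_out
            for k in range(2):
                gw2[k] += d_out * h[k]
                d_hidden = d_out * w2[k] * (1.0 - h[k] ** 2)
                gw1[k] += d_hidden * x
                gb1[k] += d_hidden
        losses.append(loss)
        # Gradient step: nudge every weight to reduce the error.
        for k in range(2):
            w1[k] -= lr * gw1[k]
            b1[k] -= lr * gb1[k]
            w2[k] -= lr * gw2[k]
        b2 -= lr * gb2
    return losses

losses = train()
print(losses[0], losses[-1])
```

The error falls over training, yet nothing in the seven learned numbers states a rule such as "square the input". Scale that up to billions of weights and you get the opacity Pinker is describing.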

A natural reaction upon hearing about such systems is that they aren’t artificially intelligent; they’re artificially stupid. Intelligence consists of satisfying multiple goals, not just one at all costs. But it’s the kind of stupidity you might have in a single network that is given a goal and trained to achieve it, which is the style of AI in deep-learning networks. That can be contrasted with a system that has explicit representations of multiple goals and constraints—the symbol-processing style of artificial intelligence that is now out of fashion.

This is an argument that my former student and collaborator Gary Marcus often makes: artificial intelligence should not be equated with neural networks trained by error back-propagation, which necessarily are opaque in their operation. More plausibly, there will be hybrid systems with explicit goals and constraints where you could peer inside and see what they’re doing and tell them what not to do.

Q: Your former MIT colleague Noam Chomsky is very bearish on large language models. He doesn’t even think they should be described as a form of intelligence. Why do you think he takes that view so firmly? Do you find yourself in alignment in any way?

SP: Large language models accomplish something that Chomsky has argued is impossible, and which admittedly I would have guessed is unlikely. And that is to achieve competence in the use of language, including subtle grammatical distinctions, from being trained on a large set of examples, with no innate constraints on the form of the rules and representations that go into a grammar. ChatGPT knows that *Who do you believe the claim that John saw?* is ungrammatical, and understands the difference between *Tex is eager to please* and *Tex is easy to please*. This is a challenge to Chomsky’s argument that it is impossible to achieve grammatical competence just by soaking up statistical correlations. One can see why he would push back.

At the same time, Chomsky is right that it’s still an open question whether large language models are good simulations of what a human child does. I think they aren’t, for a number of reasons, and I think Chomsky should have pushed these arguments rather than denying that they are competent or intelligent at all.

First, the sheer quantity of data necessary to train these models would be the equivalent of a child needing around 30,000 years of listening to sentences. So LLMs might achieve human-like competence by an entirely unrealistic route. One could argue that a human child must have some kind of innate guidance to learn a language in three years as opposed to 30,000.

Second, these models actually do have innate design features that ensure that they pick up the hierarchical, abstract, and long-distance relationships among words that Chomsky argued are inherent to human languages (as opposed to just word-to-word associations). It’s not easy to identify all these features in the GPT models because they are trade secrets.

Third, these models make very un-humanlike errors: confident hallucinations that come from mashing up statistical regularities regardless of whether the combination actually occurred in the world. For example, when asked “What gender will the first female President of the United States be?” ChatGPT replied, “There has already been a female President, Hillary Clinton, from 2017 to 2021.” Thanks to human feedback, it no longer makes that exact error, but the models still can’t help but make others quite regularly. The other day, I asked ChatGPT for my own biography. About three-quarters of the statements (mostly plagiarized from my Wikipedia page) were right, but a quarter were howlers, such as that I got my PhD “under the supervision of the psychologist Stephen Jay Gould.” Gould was a paleontologist, I never studied with him, and our main connection was that we were intellectual adversaries. This tendency to confabulate comes from a deep feature of their design, which came up in our discussion of AI doom.

Large language models don’t have a representation of facts in the world—of people, places, things, times, and events—only a statistical composite of words that tend to occur together in the training set, the World Wide Web.
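A deliberately crude way to see how word statistics alone can "mash up" true sentences into false ones is a bigram table, sketched below in Python. Real large language models are vastly more sophisticated than this, so treat it only as an illustration of the failure mode; the two-sentence corpus and the helper names `build_bigrams` and `generate_all` are hypothetical.

```python
from collections import defaultdict

# Tiny hypothetical corpus; both sentences are true statements.
CORPUS = [
    "gould was a paleontologist",
    "pinker was a psychologist",
]

def build_bigrams(sentences):
    """Map each word to the set of words seen directly after it."""
    followers = defaultdict(set)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for left, right in zip(words, words[1:]):
            followers[left].add(right)
    return followers

def generate_all(followers, max_len=6):
    """Enumerate every sentence the bigram table allows, up to max_len words."""
    results = []
    stack = [["<s>"]]
    while stack:
        path = stack.pop()
        for nxt in sorted(followers[path[-1]]):
            if nxt == "</s>":
                results.append(" ".join(path[1:]))
            elif len(path) <= max_len:
                stack.append(path + [nxt])
    return sorted(results)

sentences = generate_all(build_bigrams(CORPUS))
# Sentences the statistics permit but the corpus never contained.
novel = [s for s in sentences if s not in CORPUS]
print(novel)
```

The table has no notion of who Gould or Pinker is, only of which word tends to follow which, so it happily licenses "gould was a psychologist" alongside the two sentences it was actually given.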

A fourth problem is that human children don’t learn language like cryptographers, decoding statistical patterns in text. They hear people trying to communicate things about toys and pets and food and bedtime and so on, and they try to link the signals with the intentions of the speakers. We know this because hearing children of deaf parents exposed to just the radio or TV don’t learn language. The child tries to figure out what the input sentences mean as they hear them in context. It’s not that the models would be incapable of doing that if they were fed video and audio together with text, and were given the goal of interacting with the human actors. But so far their competence comes from just processing the statistics in texts, which is not the way children learn language. For the same reason, at intermediate stages of training, large language models produce incoherent pastiches of connected phrase fragments, very different from children’s “Allgone sticky” and “More outside.”

Chomsky should have made these kinds of arguments, because it’s a stretch to deny that large language models are intelligent in some way, or that they are incapable of handling language at all.
